In a previous blog post, I showed that Dual mode is a very good pattern when building a Power BI model that uses DirectQuery. But for it to work, both tables need to use the same data source: you can't physically join a table from SQL Server with a table from Excel. The Power BI engine still manages to do it using a clever trick. To explain how it works, I built two models, one using Dual mode and another using a composite model, and then compared their behavior.

Note: Kasper has a great video explaining how everything works behind the scenes.

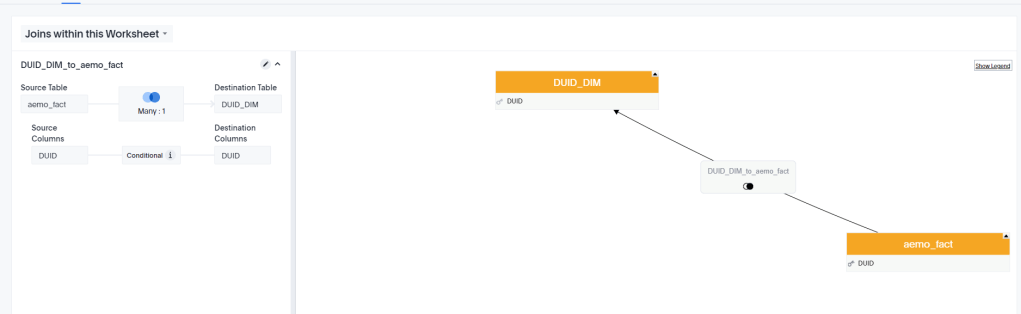

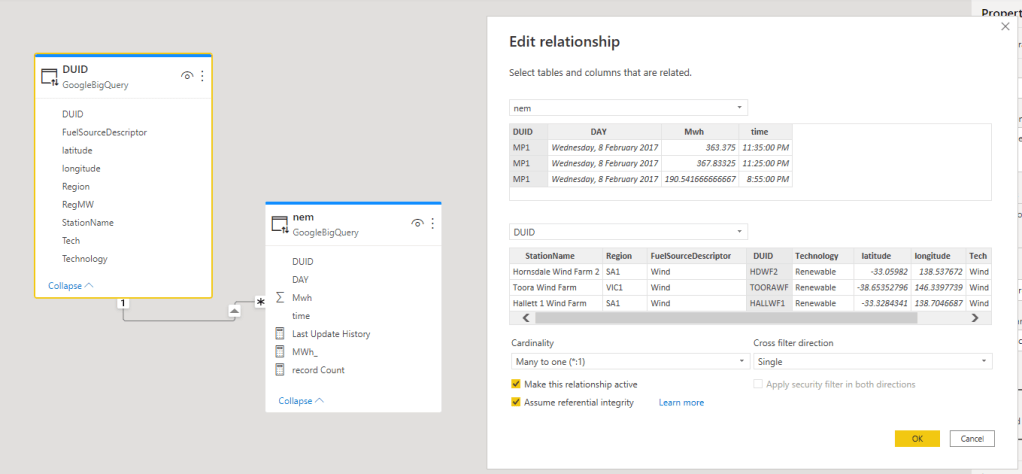

Composite Model

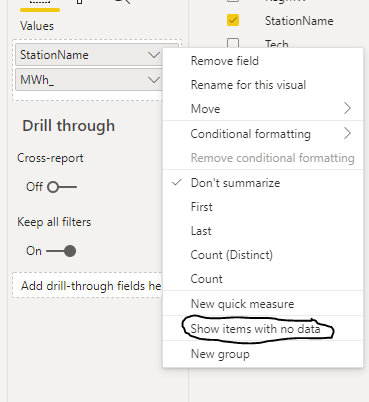

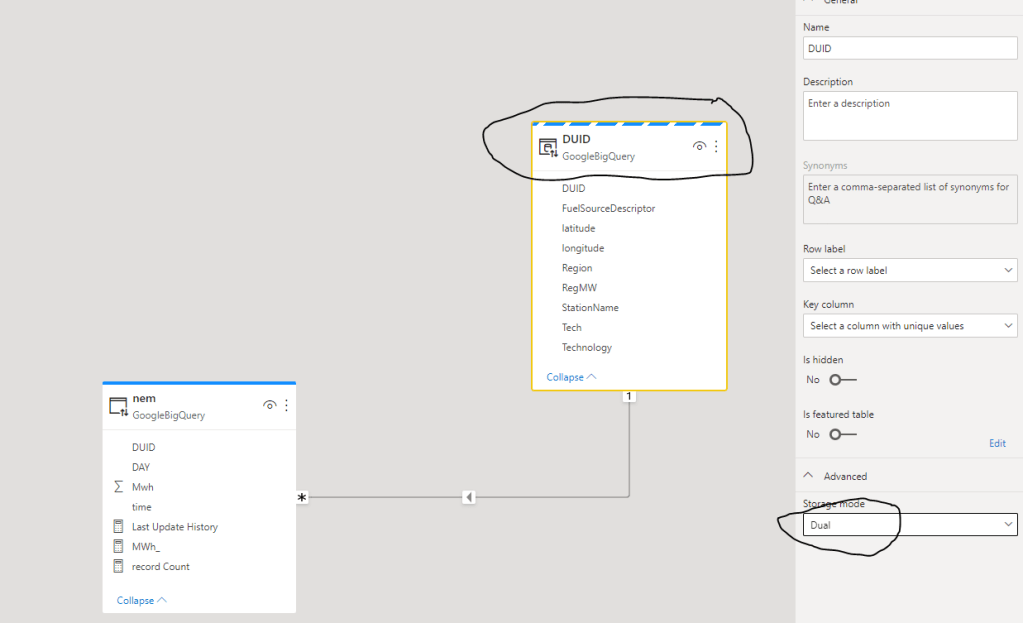

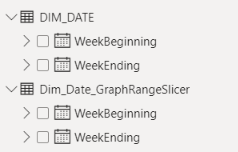

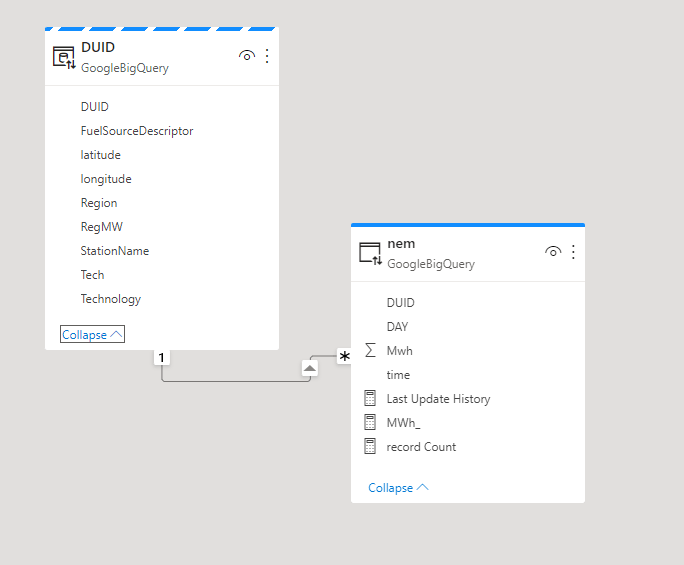

The diagram already gives an indication that the two dimension tables are imported into the local cache and that the relationship is a bit different from a “normal” relationship. I think the official term is a weak relationship.

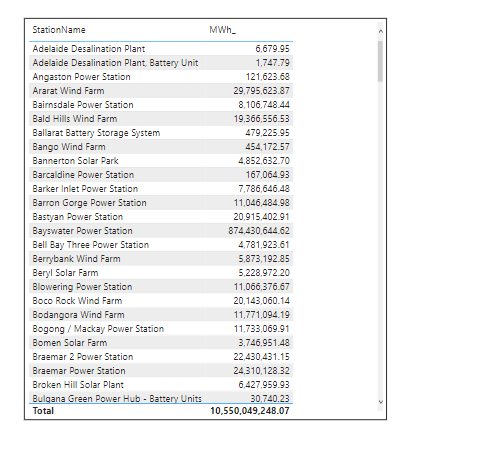

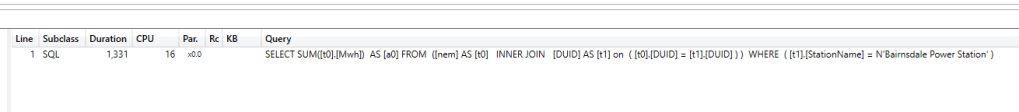

To understand how this special join works, let's try a simple query: show me the total MWh of coal production.

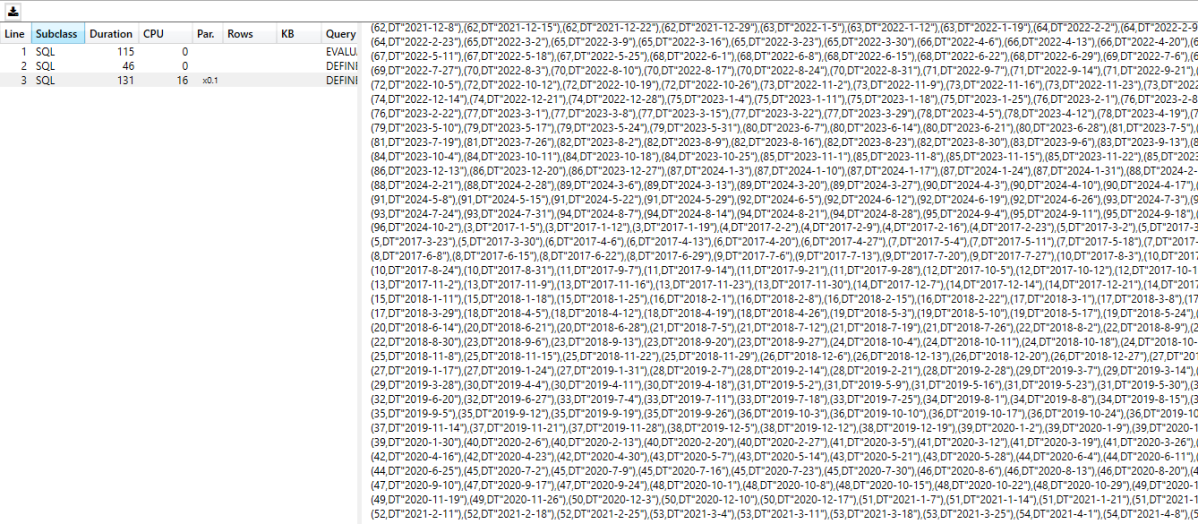

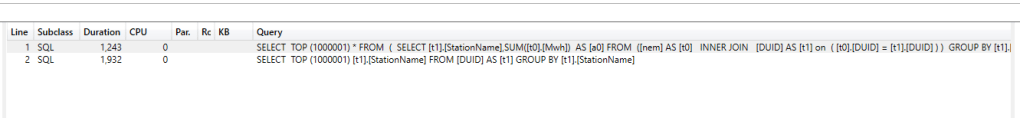

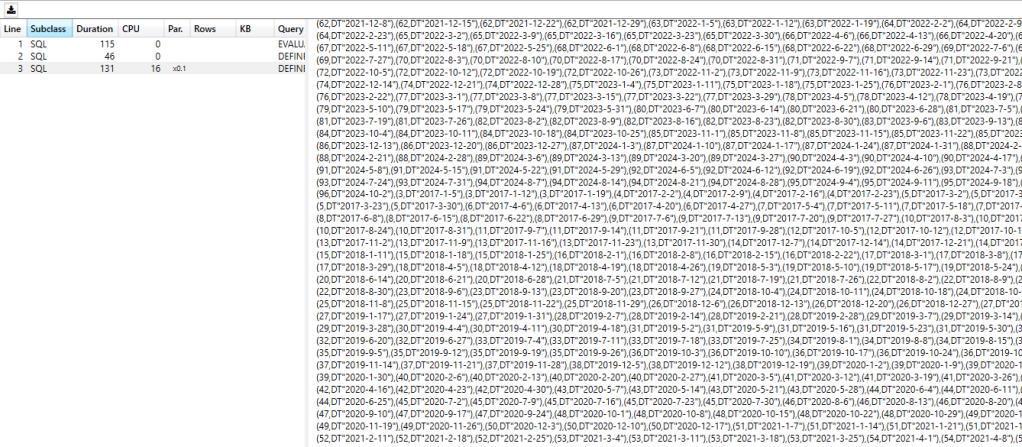

And here is the SQL query generated by the Power BI engine. At first sight it seems very weird!

    select `DUID`,
        `C1`
    from
    (
        select `DUID`,
            sum(`Mwh`) as `C1`
        from
        (
            select `DUID`,
                `DAY`,
                `time`,
                `Mwh`
            from `test-187010`.`ReportingDataset`.`UNITARCHIVE`
            where `DUID` in ('APPIN', 'BRAEMAR2', 'BRAEMAR5', 'BW02', 'OAKY2', 'TARONG#1', 'LD03', 'MP1', 'BW01', 'MORANBAH', 'LYA3', 'MP2', 'KPP_1', 'TAHMOOR1', 'TARONG#3', 'LYA2', 'CPP_3', 'BW04', 'TNPS1', 'TARONG#4', 'LYA1', 'BW03', 'OAKYCREK', 'GROSV1', 'TARONG#2', 'LYA4', 'CPP_4', 'GROSV2', 'VP6', 'CALL_B_1', 'WILGAPK', 'GSTONE5', 'VP5', 'LOYYB2', 'CALL_B_2', 'WILGB01', 'GSTONE3', 'STAN-1', 'LOYYB1', 'CALL_A_4', 'DAANDINE', 'GSTONE6', 'YWPS1', 'ER01', 'GERMCRK', 'GSTONE2', 'STAN-2', 'YWPS2', 'ER03', 'MBAHNTH', 'STAN-3', 'YWPS3', 'TERALBA', 'GSTONE4', 'STAN-4', 'YWPS4', 'ER02', 'GSTONE1', 'LD02', 'ER04', 'TOWER', 'BRAEMAR3', 'LD01', 'BRAEMAR6', 'MPP_1', 'GLENNCRK', 'BRAEMAR1', 'LD04', 'BRAEMAR7', 'MPP_2')
        ) as `ITBL`
        group by `DUID`
    ) as `ITBL`
    where not `C1` is null
    LIMIT 1000001 OFFSET 0

The fact table in DirectQuery mode identifies each station only by its DUID, the code for the station (coal power plant, solar farm, wind farm, etc.). The remote source here is BigQuery, which has no idea what "Coal" means, as it is not a field defined in the table.

The Power BI engine is smart enough to know which DUIDs belong to Coal, as that is defined in the dimension table. It gets those items, injects them as a filter in the SQL query, sends the query to the source system, and gets back the results.
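The filter injection described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how the Power BI engine is actually implemented: the function name `build_pushdown_query`, the `Category` column, and the `SOLARFARM1` station are hypothetical, and the generated SQL is a simplified version of the query shown earlier.

```python
def build_pushdown_query(dimension_rows, category, fact_table="UNITARCHIVE"):
    """Resolve a dimension filter locally, then inject the matching keys as an IN list."""
    # Step 1: find the keys locally, using the imported dimension table.
    duids = sorted({row["DUID"] for row in dimension_rows if row["Category"] == category})
    # Step 2: turn them into a SQL IN list (single quotes escaped by doubling).
    in_list = ", ".join("'" + duid.replace("'", "''") + "'" for duid in duids)
    # Step 3: the remote source only ever sees DUID values, never the category.
    return (
        "select `DUID`, sum(`Mwh`) as `C1` "
        f"from `{fact_table}` "
        f"where `DUID` in ({in_list}) "
        "group by `DUID`"
    )


# Tiny stand-in for the imported dimension table (column names are assumptions).
dimension = [
    {"DUID": "APPIN", "Category": "Coal"},
    {"DUID": "BW01", "Category": "Coal"},
    {"DUID": "SOLARFARM1", "Category": "Solar"},  # hypothetical station
]

query = build_pushdown_query(dimension, "Coal")
print(query)
```

The word "Coal" never appears in the generated query, only the list of DUID values does, which is why the IN list grows with the number of dimension members selected.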

To be honest, I like this approach very much. With the usage-based databases I have used, Synapse Serverless and BigQuery, you pay a minimum of 10 MB per table, so if you can avoid joins and pass everything as filters, you save a bit of money.

Does it scale though?

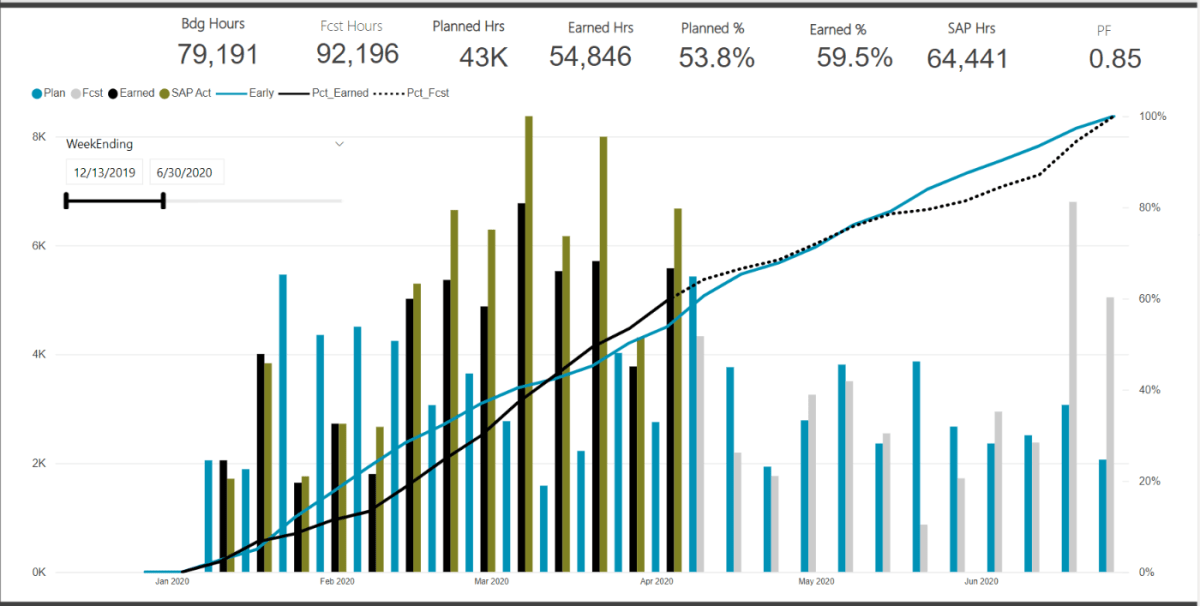

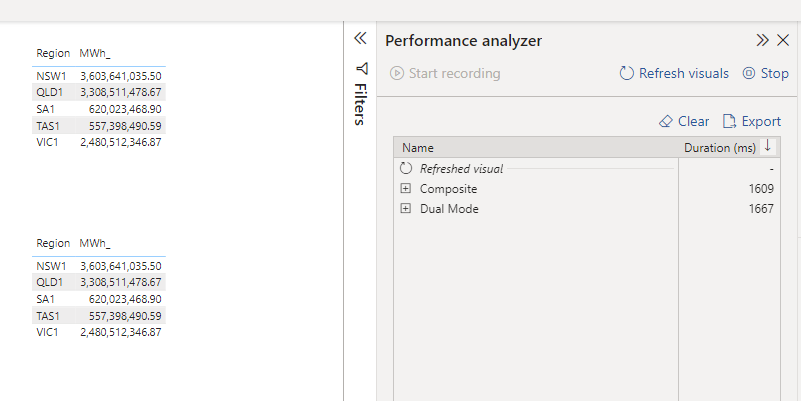

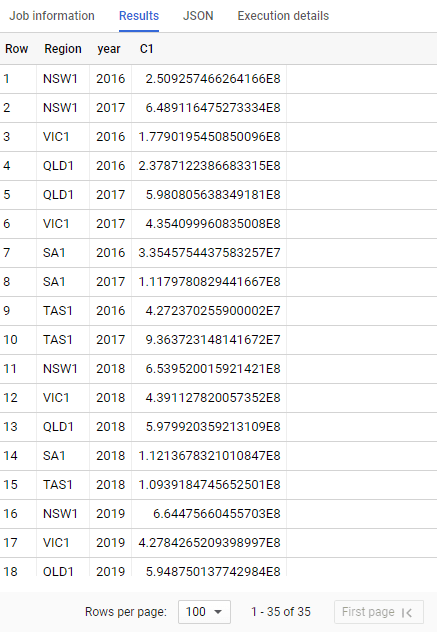

To test it, I built two identical visuals, one using the composite model and the other using Dual mode.

So far, so good: nearly the same performance (it is hard to believe it is 80 million rows, and the region is Tokyo).

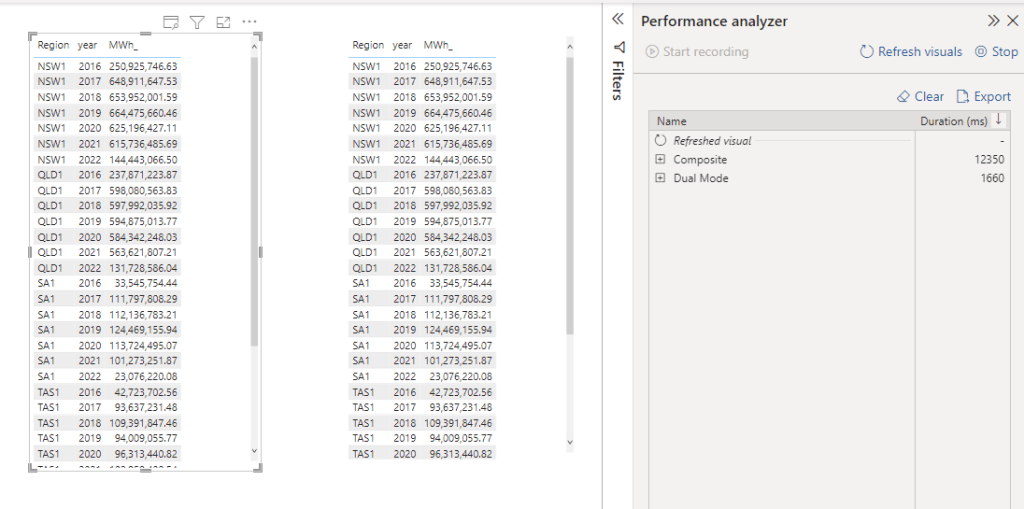

Now let's add a date dimension: show me MWh per state per year.

That's not good. 12 seconds is definitely not interactive. My first gut feeling was that BigQuery slowed down because of all those filter values. Let's check.

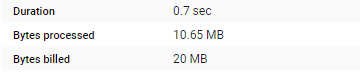

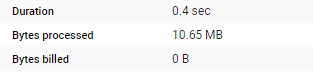

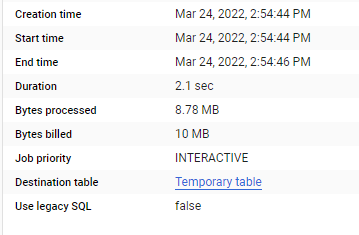

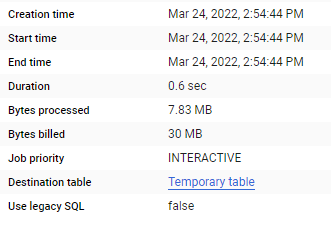

Composite model: 2.1 seconds. Notice it billed only 10 MB (I am using a materialized view on the base table).

And now Dual mode, which does the joins at the source; that's why I am billed for 30 MB (Synapse Serverless does the same).
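The billing difference is simple arithmetic once you take the 10 MB minimum per table mentioned earlier. A minimal sketch, assuming the Dual mode query touches three tables (the fact table plus two dimension tables, whose names here are hypothetical) and that each small query only hits the minimum charge:

```python
MIN_BILLED_MB_PER_TABLE = 10  # minimum charge per table, as stated in this post


def minimum_billed_mb(tables_referenced):
    """Lower bound on the billed size when every table only hits the minimum."""
    return MIN_BILLED_MB_PER_TABLE * len(tables_referenced)


# Composite model: only the fact table is queried, filters are passed as values.
composite_mb = minimum_billed_mb(["fact"])

# Dual mode: the join happens at the source, so the dimensions are scanned too.
# Table names are assumptions for illustration.
dual_mb = minimum_billed_mb(["fact", "dim_station", "dim_date"])

print(composite_mb, dual_mb)  # prints: 10 30
```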

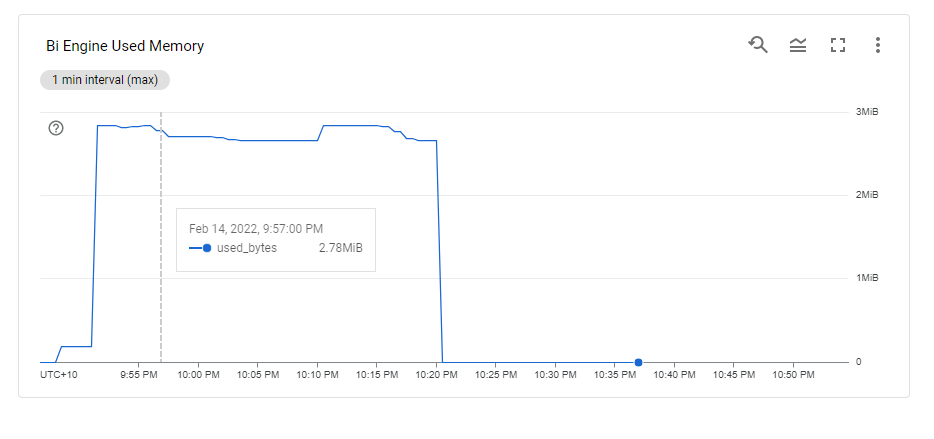

Data Transfer is the bottleneck

OK, doing the join at the source is faster, but that still does not explain the big difference observed in Power BI. Now let's check the result set returned by each query.

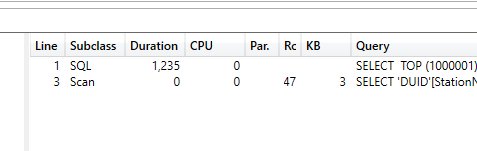

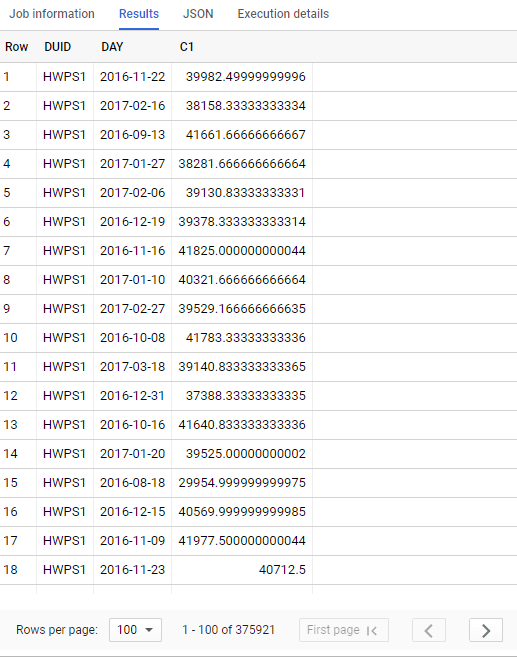

Dual Mode

35 rows, the same level of granularity as the visual

Composite Model

375K rows returned. Yes, that is correct: the query Power BI sends in composite mode cannot group by Year and Region, since those columns live in the local dimension tables. It has to get everything at the DUID and Day level and then group everything locally using the special join.

Downloading that many rows is slow and not very efficient for everything involved. Yes, I know, you can add the year and region columns to the fact table, but in that case we may as well just use a flat table and call it a day. (I am joking; you still need dimensions, especially if you want to join another fact table.)

So is the composite model bad? Absolutely not, but there is no free lunch. If you use it with dimensions that generate a small number of rows, it is fine; otherwise it can be slow. Data warehouses are fast, but data transfer is always a problem.
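The gap in returned rows is easy to reproduce at toy scale. The sketch below uses an in-memory SQLite database as a stand-in for the remote source. Everything in it is made up for illustration (10 stations, 10 days, table and column names), and the year is derived from the day string instead of a real date dimension, so treat it as a rough model of the two strategies and not as what Power BI actually runs.

```python
import sqlite3
from collections import defaultdict

con = sqlite3.connect(":memory:")  # stand-in for the remote warehouse
con.executescript("""
    create table fact (DUID text, DAY text, Mwh real);
    create table dim_station (DUID text, Region text);
""")

stations = [(f"S{i}", "NSW" if i % 2 else "QLD") for i in range(10)]
days = [f"{year}-01-{d:02d}" for year in (2019, 2020) for d in range(1, 6)]
con.executemany("insert into dim_station values (?, ?)", stations)
con.executemany(
    "insert into fact values (?, ?, ?)",
    [(duid, day, 1.0) for duid, _ in stations for day in days],
)

# Dual mode: join and group at the source, only the final rows travel back.
dual_rows = con.execute("""
    select s.Region, substr(f.DAY, 1, 4), sum(f.Mwh)
    from fact f
    join dim_station s on s.DUID = f.DUID
    group by s.Region, substr(f.DAY, 1, 4)
""").fetchall()

# Composite model: the source knows nothing about Region or Year,
# so the query can only group by the join keys.
composite_rows = con.execute(
    "select DUID, DAY, sum(Mwh) from fact group by DUID, DAY"
).fetchall()

# The final grouping then happens locally, against the imported dimension.
region_of = dict(stations)
local_totals = defaultdict(float)
for duid, day, mwh in composite_rows:
    local_totals[(region_of[duid], day[:4])] += mwh

print(len(dual_rows), len(composite_rows))  # prints: 4 100
```

Both approaches end up with the same four totals, but the composite path has to move every DUID and Day combination over the wire first.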

How about DirectQuery for Power BI datasets?

It works the same way. Two remote Power BI datasets are completely isolated from one another; Power BI just sees them as separate servers, and the join works by passing filter values around. Vertipaq is very fast, though, and all the datasets are located in the same place, so I suspect it is less of a problem. But if you are not careful with high-cardinality dimensions, it may slow down the experience.

This is an example of a composite model between two very small tables from two Power BI datasets. The DAX query passes day filters around. It is still fast, but the more you add, the slower it gets.

We don't really use composite models at work, as they currently need Build permission for every user, and I find that sometimes rebuilding a model from scratch is much more practical than trying to decipher someone else's disconnected-table measure shenanigans. I think we currently use it only for a special model that shows a summary of all KPIs from all existing models, grouped at a very high level.

The perfect use case for a composite model is when you have a mature enterprise model and only need to add a special dimension, like a different hierarchy. For anything else you need to be rather careful, or you may end up with spaghetti models all over the place.

What if?

But I have to admit, the concept is very tempting and makes you wonder. What if we could somehow join two arbitrary datasets using a real join? Vertipaq engineers are clever and they can figure it out. What if the Power BI service accepted a DAX query and loaded not the whole model but just the columns used by the query, maybe even only the partitions needed? What if, in the Power BI service, you had separate datasets just for storing data by department, and a lot of lightweight logical models in DirectQuery mode?

Total separation of storage, compute, and semantic model, all using the same tables. Can we just imagine what Vertipaq will look like in 2030?