The use case is very simple: sharing public data continuously and as cheaply as possible, especially when the consumers are in a different geographic region.

Note: this is not an officially supported solution, and the data can be inconsistent while it is being copied to R2, but it is good enough for public data.

How to

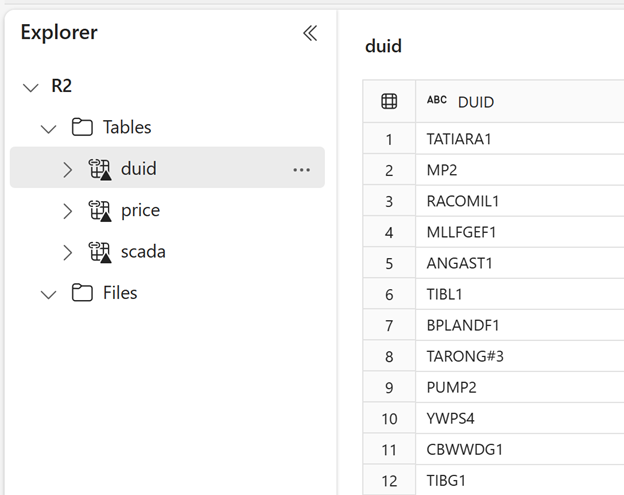

1- The data is prepared and cleaned using Fabric and saved in OneLake.

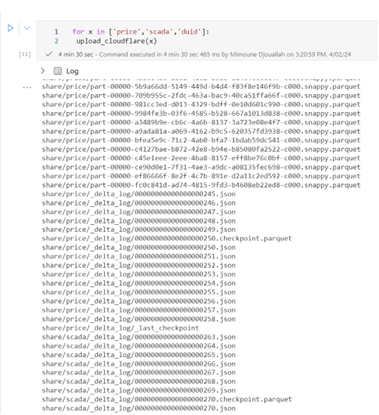

2- Copy the data to Cloudflare R2 using code. As of today, shortcuts to S3 do not support write operations. Dataflow Gen2 (data pipeline) supports S3 as a destination, although I did not test it; I used code because I already had it from a previous project. You pay egress fees for this operation, plus R2 storage and transaction costs.

3- Provide an access token to users or make the bucket public. You don't pay egress fees from Cloudflare to end users, but the throughput is not guaranteed.
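Step 2 can be sketched in Python with boto3, which works against R2 because R2 exposes an S3-compatible API. This is a minimal sketch, not the code used for this post: it assumes a default lakehouse is attached to the Fabric notebook so OneLake files appear under `/lakehouse/default/Files/`, and the folder name, bucket name, and helper names (`plan_uploads`, `upload_to_r2`) are hypothetical.

```python
from pathlib import Path

# Hypothetical values: assumes a default lakehouse is attached to the notebook,
# so its OneLake files are mounted locally under /lakehouse/default/Files/.
SOURCE_DIR = "/lakehouse/default/Files/public_data"
BUCKET = "public-data"


def plan_uploads(source_dir: str, prefix: str = "") -> list[tuple[str, str]]:
    """Return (local_path, object_key) pairs for every file under source_dir."""
    root = Path(source_dir)
    files = [p for p in root.rglob("*") if p.is_file()]
    # Data files first, Delta log files last: a reader listing the bucket
    # mid-copy is then less likely to see a log entry for a missing file.
    files.sort(key=lambda p: ("_delta_log" in p.relative_to(root).parts,
                              p.relative_to(root).as_posix()))
    # Object keys use forward slashes regardless of the local OS.
    return [(str(p), prefix + p.relative_to(root).as_posix()) for p in files]


def upload_to_r2(source_dir: str, bucket: str, endpoint: str,
                 key_id: str, secret: str, prefix: str = "") -> int:
    """Upload every file under source_dir to an R2 bucket; return the count."""
    import boto3  # imported lazily so plan_uploads works without boto3

    s3 = boto3.client(
        "s3",
        endpoint_url=endpoint,  # https://<account_id>.r2.cloudflarestorage.com
        aws_access_key_id=key_id,
        aws_secret_access_key=secret,
        region_name="auto",  # the region value Cloudflare documents for R2
    )
    uploads = plan_uploads(source_dir, prefix)
    for local_path, key in uploads:
        s3.upload_file(local_path, bucket, key)
    return len(uploads)


# Usage: upload_to_r2(SOURCE_DIR, BUCKET, endpoint, key_id, secret)
```

Copying file by file is not atomic, so uploading the Delta log last only narrows the inconsistency window mentioned in the note at the top; it does not remove it.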

Today, Fabric shortcuts require the list buckets permission; please vote for this idea to remove this requirement.
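Before creating a shortcut, it can help to confirm that a token actually has the list buckets permission. A minimal sketch, assuming a boto3 S3 client created with `endpoint_url` set to the R2 endpoint, and assuming R2 reports a missing permission with the S3 error code `AccessDenied`; `can_list_buckets` is a hypothetical helper name.

```python
def can_list_buckets(client) -> bool:
    """Return True if the credentials behind `client` may call ListBuckets.

    `client` is assumed to be a boto3 S3 client pointed at the R2 endpoint.
    Errors other than AccessDenied (network, bad endpoint, ...) are re-raised.
    """
    try:
        client.list_buckets()
    except Exception as exc:
        # botocore's ClientError carries the S3 error code in exc.response.
        response = getattr(exc, "response", None) or {}
        if response.get("Error", {}).get("Code") == "AccessDenied":
            return False
        raise
    return True
```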

For example, I am writing public data in Fabric US-West and consuming it in Fabric Melbourne.

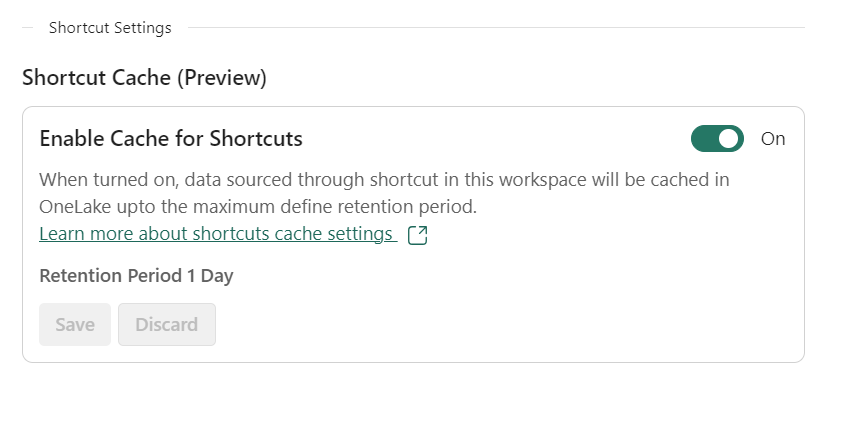

Make sure you turn on caching for OneLake; it helps performance a lot.
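The cost argument behind steps 2 and 3 can be written as a small model. Without R2, every pull by a remote consumer (such as the Melbourne workspace above) is billed as egress out of Azure; with R2, egress is paid once for the copy, then R2 storage and request charges apply. This is a rough sketch: the `Rates` fields are placeholders to fill in from current Azure and Cloudflare price lists, and the model ignores Fabric capacity, free tiers, and repeated refreshes.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Placeholder unit prices; fill in from current price lists."""
    azure_egress_per_gb: float       # data leaving Azure
    r2_storage_per_gb_month: float   # R2 storage
    r2_class_a_per_million: float    # R2 write-type operations (e.g. PutObject)
    r2_class_b_per_million: float    # R2 read-type operations (e.g. GetObject)


def direct_cost(size_gb: float, consumers: int, rates: Rates) -> float:
    """Every remote consumer reads from Azure, so egress is paid per consumer."""
    return size_gb * consumers * rates.azure_egress_per_gb


def via_r2_cost(size_gb: float, writes: int, reads: int, rates: Rates) -> float:
    """Egress is paid once for the copy; R2 adds storage and request charges
    but no egress to consumers, so only `reads` grows with consumers."""
    return (
        size_gb * rates.azure_egress_per_gb
        + size_gb * rates.r2_storage_per_gb_month
        + writes / 1_000_000 * rates.r2_class_a_per_million
        + reads / 1_000_000 * rates.r2_class_b_per_million
    )
```

Comparing `direct_cost` with `via_r2_cost` for the same dataset shows roughly how many remote consumers it takes before the copy to R2 pays for itself.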

You can try it

You can try it yourself using these credentials. They are temporary, and I may delete them at any time.

Access Key ID

3a3d5b5ce8c296e41a6de910d30e7fb6

Secret

9a080220941f3ff0f22ac93c7d2f5ec1d73a77cd3a141416b30c1239efc50777

Endpoint

https://c261c23c6a526f1de4652183768d7019.r2.cloudflarestorage.com
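A minimal sketch for exploring the shared data from Python with the credentials above, assuming boto3 is installed and the credentials are still active. No bucket name is given here, so the commented usage discovers buckets with ListBuckets; the helper names (`r2_client`, `list_keys`, `objects_per_folder`) are hypothetical.

```python
from collections import Counter

ENDPOINT = "https://c261c23c6a526f1de4652183768d7019.r2.cloudflarestorage.com"
ACCESS_KEY_ID = "3a3d5b5ce8c296e41a6de910d30e7fb6"
SECRET = "9a080220941f3ff0f22ac93c7d2f5ec1d73a77cd3a141416b30c1239efc50777"


def r2_client():
    """Create an S3-compatible boto3 client for the R2 endpoint above."""
    import boto3  # imported lazily so the pure helpers work without boto3

    return boto3.client(
        "s3",
        endpoint_url=ENDPOINT,
        aws_access_key_id=ACCESS_KEY_ID,
        aws_secret_access_key=SECRET,
        region_name="auto",
    )


def list_keys(client, bucket: str) -> list[str]:
    """Return every object key in `bucket`, following ListObjectsV2 pagination."""
    keys = []
    for page in client.get_paginator("list_objects_v2").paginate(Bucket=bucket):
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys


def objects_per_folder(keys: list[str]) -> Counter:
    """Count objects per top-level folder, e.g. one folder per table."""
    return Counter(key.split("/", 1)[0] for key in keys)


# Usage (needs network access and valid credentials):
# client = r2_client()
# for bucket in client.list_buckets()["Buckets"]:
#     print(bucket["Name"], objects_per_folder(list_keys(client, bucket["Name"])))
```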