TL;DR: Fabric notebooks have a very nice feature: they mount a remote Azure container as a “fake” local filesystem. This works at the OS level, so for a lot of programs it is just another folder. You can learn more details here

How it works

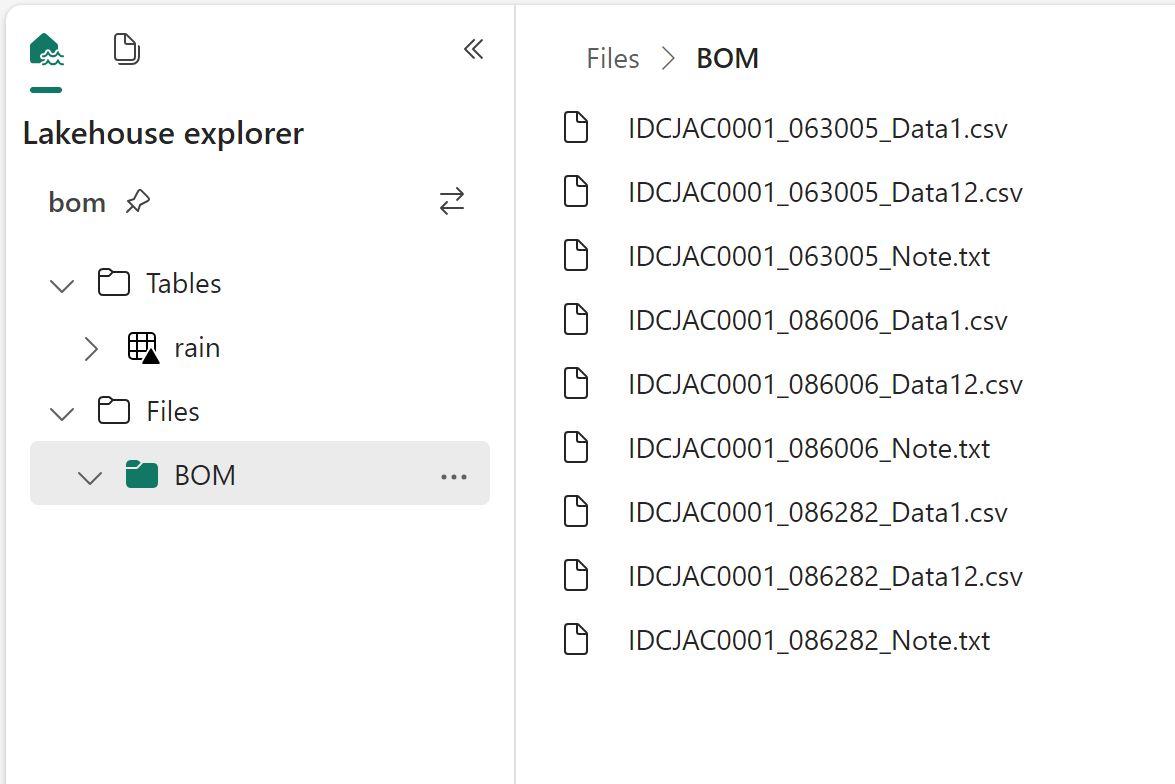

When you mount a Fabric Lakehouse, you get something like this:

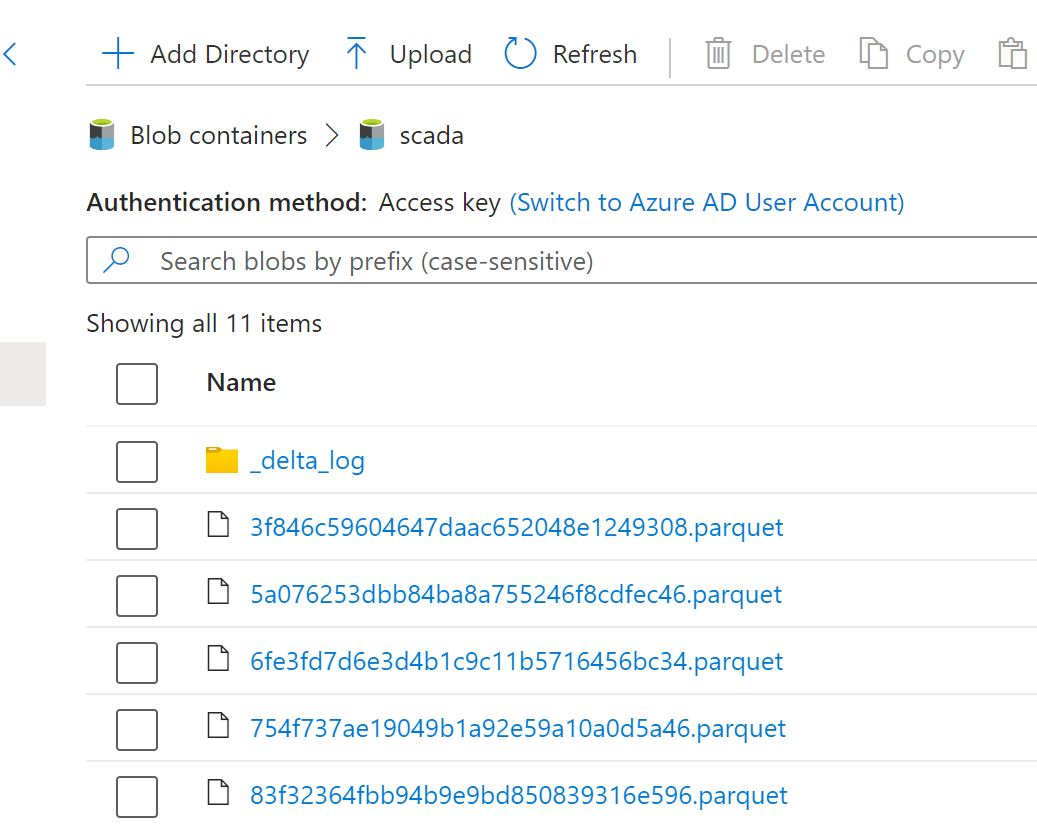

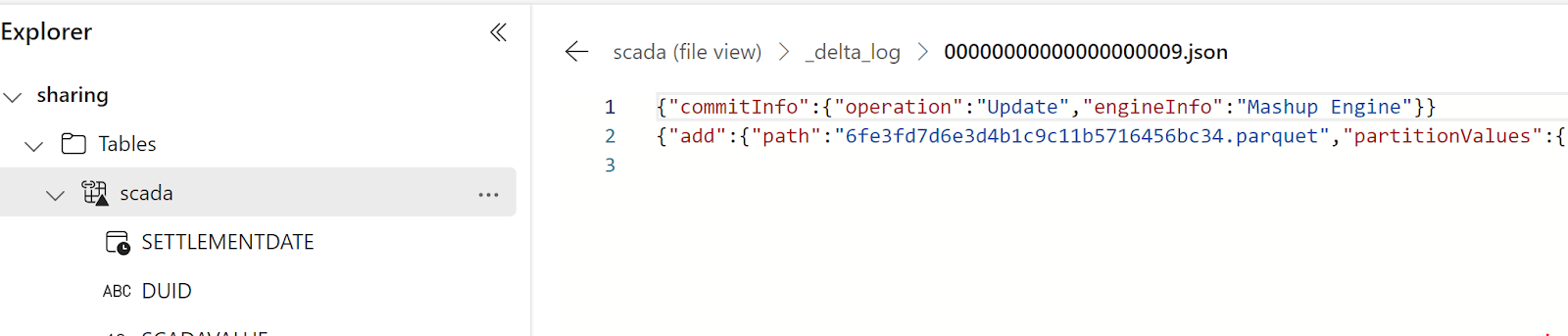

In the Files section, you can store any files. The Tables section is special: if you create a Delta table there, Fabric will automatically expose it to all Fabric engines (SQL, VertiPaq, etc.). In both cases the data is stored in an Azure storage container.

When you run the command !df -h in the notebook, the Lakehouse shows up in the list of mounted filesystems.
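To look at the same thing from Python instead of the shell, here is a minimal sketch that reads /proc/mounts (the standard Linux list of mounted filesystems) and keeps only the FUSE entries. The helper names `parse_mounts` and `fuse_mounts` are mine, and I am assuming the Lakehouse mount reports a filesystem type starting with "fuse", which is what a BlobFuse mount normally does.

```python
from pathlib import Path


def parse_mounts(text):
    """Parse /proc/mounts-style text into (device, mount_point, fs_type) tuples."""
    entries = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 3:
            entries.append((fields[0], fields[1], fields[2]))
    return entries


def fuse_mounts(text):
    """Keep only the entries whose filesystem type starts with 'fuse'."""
    return [entry for entry in parse_mounts(text) if entry[2].startswith("fuse")]


if __name__ == "__main__":
    proc_mounts = Path("/proc/mounts")  # only exists on Linux
    if proc_mounts.exists():
        for device, mount_point, fs_type in fuse_mounts(proc_mounts.read_text()):
            print(f"{fs_type:12} {device:20} {mount_point}")
```

On a machine without any FUSE mount this prints nothing, which is itself a quick way to check whether a folder is a real disk or a mounted remote store.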

When a program sends a command to delete, read, write, etc., the system automatically translates it into Azure storage API calls. It is not perfect, and it is still not a real local filesystem, but so far it works well, especially for reads. I tested with 5 different SQL engines (DuckDB, DataFusion, Databend, GlareDB and Hyper) and they all work fine, although the performance varied. I noticed that Hyper has some serious performance issues, but I understand it may not be a priority for them to fix it 🙂
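The point of the mount is that plain file APIs work without any Azure SDK. A minimal sketch of the write, read, list and delete round trip using only the Python standard library follows. The function name `roundtrip` is mine, and the example runs against a temporary folder so it works anywhere; in a Fabric notebook you would pass the mounted Files folder instead (I am assuming something like /lakehouse/default/Files, so check the path in your own notebook).

```python
import tempfile
from pathlib import Path


def roundtrip(folder):
    """Write, read, list and delete one small file using ordinary file calls."""
    folder = Path(folder)
    target = folder / "hello.txt"

    target.write_text("hello from the notebook\n")       # write
    content = target.read_text()                         # read
    listing = sorted(p.name for p in folder.iterdir())   # list
    target.unlink()                                      # delete

    return content, listing, target.exists()


if __name__ == "__main__":
    # A temporary local folder stands in for the mounted Lakehouse folder here.
    print(roundtrip(tempfile.mkdtemp()))
```

On a mounted folder each of these calls is translated into storage requests behind the scenes, so expect them to be slower than on a local disk.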

Writing DuckDB native file format

This one was very confusing to me the first time I used it. My understanding was that files in remote storage are immutable: you can create new files or delete them, but not modify an existing one. But somehow it works.

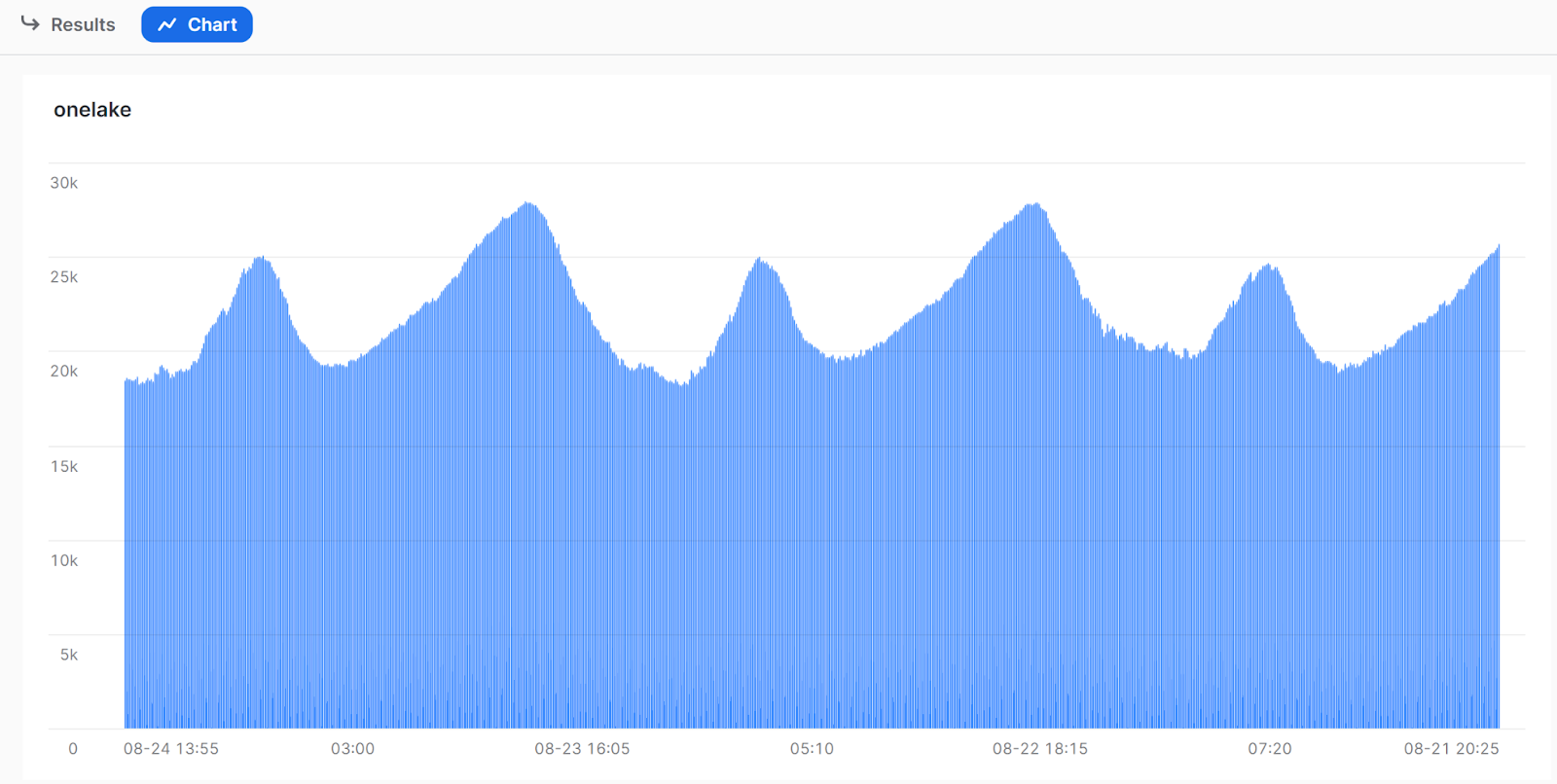

Opening a remote DuckDB file in write mode; the file is 25 GB.

35 seconds is still slow, I thought it was supposed to read only some metadata! I think that’s a DuckDB limitation.

Then I deleted a random number from a table; it took 9 seconds to delete 85 million records (this is amazing performance).

Then I ran a checkpoint and it worked fine.

BlobFuse2 will be a game changer

Currently the Fabric notebook runtime uses BlobFuse v1 for OneLake, which as far as I can tell does not support any cache. In my experience the throughput is rather good, but it is still an object store and will never reach the speed of an SSD. BlobFuse2 may well be the best thing to happen to Fabric notebooks: it has a native disk cache, and because it works at the OS level, every program gets a free cache. I hope the product team will upgrade soon.
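To make the idea of a disk cache concrete, here is a toy read-through file cache in plain Python. To be clear, this is only an illustration of the concept and not how BlobFuse2 is implemented; the function name `cached_path` and the mtime-based freshness check are my own simplifications.

```python
import shutil
from pathlib import Path


def cached_path(remote_file, cache_dir):
    """Return a local copy of remote_file, copying only when needed.

    The file is copied on the first call, and again only if the remote
    copy has a newer modification time than the cached one. Files are
    keyed by name alone, so two remote files with the same name would
    collide in this toy version.
    """
    remote_file = Path(remote_file)
    cache_dir = Path(cache_dir)
    cache_dir.mkdir(parents=True, exist_ok=True)
    local_copy = cache_dir / remote_file.name

    stale = (
        not local_copy.exists()
        or local_copy.stat().st_mtime < remote_file.stat().st_mtime
    )
    if stale:
        # copy2 preserves the modification time, so the next call is a hit.
        shutil.copy2(remote_file, local_copy)
    return local_copy
```

With a cache like this in Python, only code that calls `cached_path` benefits. A cache at the filesystem level gives the same effect to every program that opens the file, with no code changes.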