This article captures two related issues that are perhaps not clearly discussed or understood (not even by me): level of detail, and transactional vs. account-based tracking.

Many cost tools, rightly or wrongly, are now almost entirely control account based. This creates conceptual issues for people who are used to managing data in a more transactional way.

Additionally, once we begin to establish defined control accounts, picking the right level of detail and deciding how changes are managed between control accounts requires a lot of creative accounting (thoughtful process mapping through all your systems and digital strategy).

What follows is just a primer for a potential discussion: what it means to pick the correct level of detail, and what that choice really means for how you manage your costs.

Transaction Management

This is my wheelhouse, the way my brain primarily works when dealing with projects. List Management. Everything is a new record.

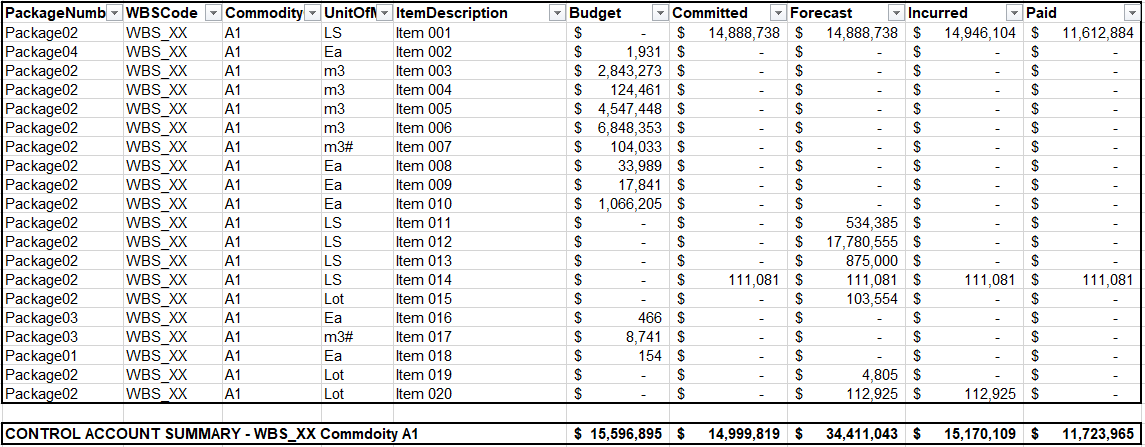

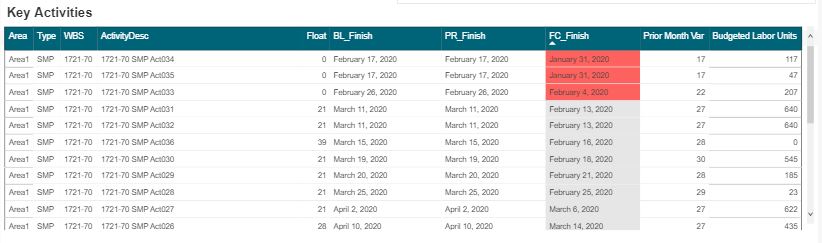

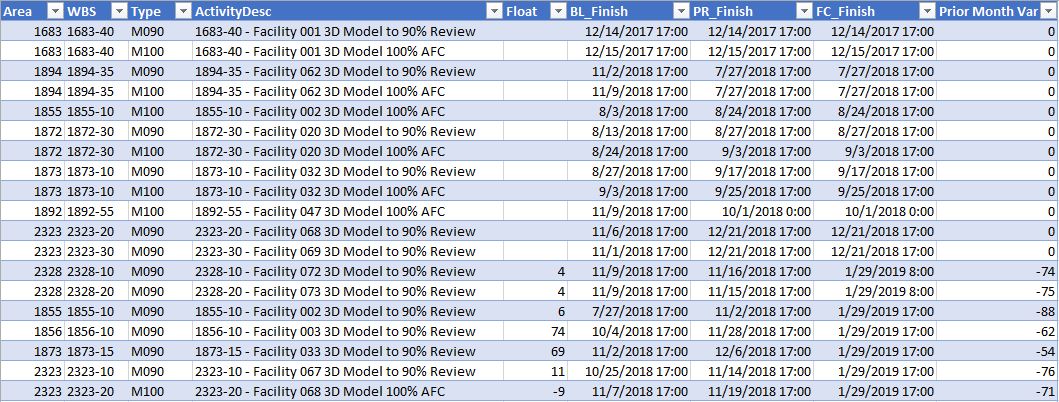

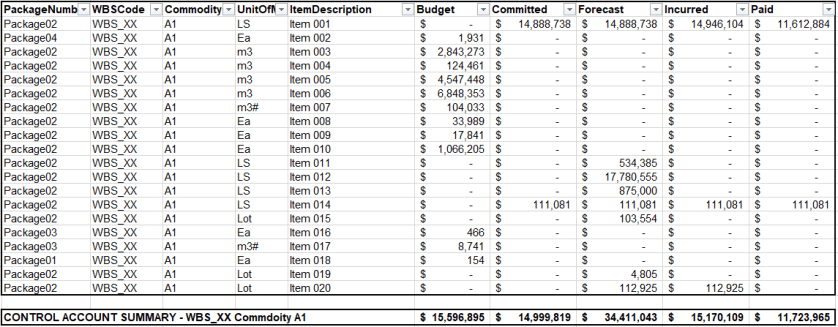

We have contracts with detailed line items, and we want to retain our budget line items. Each contract line item will have various columns for Committed, Forecast, Incurred and Paid values. We manage these detail items in this way. The example shown here reflects how we really manage costs (and progress and deliverables). Excel (or SharePoint, or a simple flexible database) provides an ideal solution for users to manage this detail.

However, the new generation of cost tools wants us to view projects at more of a “control account” level. In the above example, I have created a control account at a WBS and commodity code level of detail.

In the above example, we have 4 contractors working on this scope in various capacities. It is, however, more subtle than that: we actually have only 1 contract and 3 expected contracts. We have parsed our budget into what we expect to be 4 different contracts. Thus only 1 contract has a commitment, yet we have a forecast (and budget) for all 4. Each contract will have a full list of detail items that we will manage. We will have items for specified growth, perhaps contingency, maybe a few site instructions. A transactional list!
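As a rough illustration of the transactional view described above, here is a minimal Python sketch. Every name and amount in it (the `LineItem` class, the contract IDs, the values) is invented for illustration and is not taken from the example in the images; it only shows the shape of the data, with one committed contract and three expected ones.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LineItem:
    """One transactional record. Field names are assumptions for this sketch."""
    contract: str            # hypothetical contract identifier
    description: str
    budget: float = 0.0
    committed: float = 0.0
    forecast: float = 0.0


# Invented data: one awarded contract and three expected contracts.
items = [
    LineItem("C-001", "Base scope", budget=100.0, committed=100.0, forecast=100.0),
    LineItem("C-001", "Site instruction 1", committed=10.0, forecast=10.0),
    LineItem("C-001", "Specified growth", forecast=5.0),
    LineItem("C-002 (expected)", "Base scope", budget=80.0, forecast=80.0),
    LineItem("C-003 (expected)", "Base scope", budget=60.0, forecast=60.0),
    LineItem("C-004 (expected)", "Base scope", budget=40.0, forecast=40.0),
]


def totals_by_contract(items):
    """Sum budget, committed and forecast for each contract."""
    totals = defaultdict(lambda: {"budget": 0.0, "committed": 0.0, "forecast": 0.0})
    for item in items:
        t = totals[item.contract]
        t["budget"] += item.budget
        t["committed"] += item.committed
        t["forecast"] += item.forecast
    return dict(totals)


by_contract = totals_by_contract(items)

# Only the awarded contract carries a commitment; all four carry a forecast.
committed_contracts = [c for c, t in by_contract.items() if t["committed"] > 0]
```

Every change (a site instruction, a growth allowance) is simply a new record in the list, which is what makes this style of management feel natural in a spreadsheet.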

Quantity Growth – new line or modify existing?

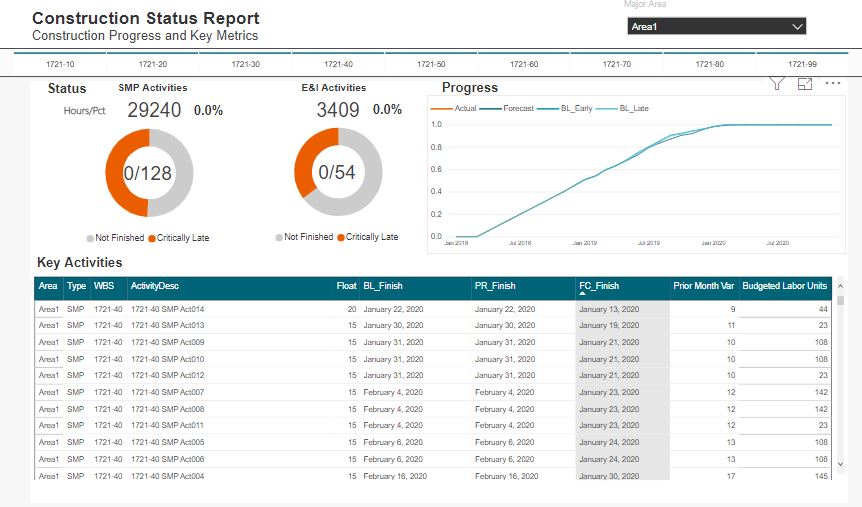

Here again is one of the conundrums of how we manage, specifically related to progress measurement. Consider a project with some concrete and steel.

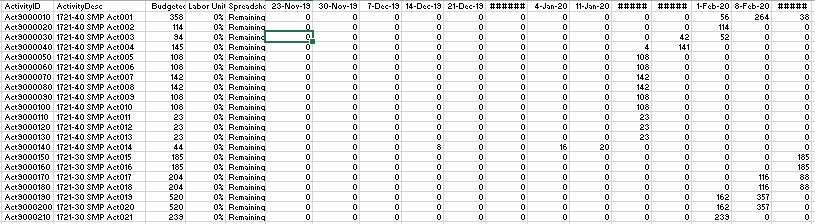

If we have a change in quantity for a foundation, where do we capture the change, and what does it look like in our database? Too often, we look at this and manage it in a simple Excel file, which can make the process easy. However, this is a very complex issue. If we add a line item and base our progress on committed quantities, we will have to update 2 line items with percentages. And that is only one of the many options that exist to capture this.

If instead this item is managed at the “control account” level, all we need is the total actual quantity, or simply the overall % for the control account. When you look at the above from a control account level, you still capture all the detail, but for % management you can disregard the percentages on the detail items and enter a single % against the control account.
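The two approaches can be compared with a small sketch. The quantities and percentages below are invented, and the quantity-weighted formula is one common way to combine line-item progress, assumed here rather than taken from any particular cost tool.

```python
# Hypothetical concrete foundation, quantities in cubic metres (invented values).
lines = [
    {"item": "Foundation (original)", "qty": 100.0, "pct_complete": 0.50},
    {"item": "Foundation (growth)", "qty": 20.0, "pct_complete": 0.25},
]


def line_item_progress(lines):
    """Method 1: keep a % on every line and weight each by its quantity."""
    total_qty = sum(line["qty"] for line in lines)
    earned_qty = sum(line["qty"] * line["pct_complete"] for line in lines)
    return earned_qty / total_qty


def control_account_progress(installed_qty, total_qty):
    """Method 2: one % for the whole control account from total quantities."""
    return installed_qty / total_qty


method_1 = line_item_progress(lines)

# At control account level we only know the totals: 55 installed out of 120.
method_2 = control_account_progress(installed_qty=55.0, total_qty=120.0)
```

With consistent inputs both methods give the same overall figure. The difference is in what you can still report: only the first method retains a separate percentage for the growth line.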

Which method is right? Which method fits into your cost/progress system? Which approach aligns with your specifications?

In the second method above, you lose the ability to calculate a specific % for variations. So again, we have taken a simple issue and created a complex nightmare. Obviously, we all solve these problems day in and day out. The point here is just to bring this topic to light, and to show how the new range of cost tools may not be flexible enough to really capture what we do, nor should they be! The real answer is what we all do now: some detail is managed inside Excel and some abstraction ends up in the system.

Control Account Management

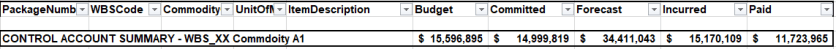

In the above, we have seen the importance of picking the right control account level of detail, but we perhaps haven’t conceptualized what a control account is in the first place. For “control account” management, we want to manage “SCOPE”. For a given package of scope, we sum up all the detail coded to the same codes.
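Summing detail by shared coding can be sketched in a few lines of Python. The records, the code values and the `roll_up` helper are all hypothetical; the control account key is assumed to be the WBS plus commodity code pairing used earlier in this post.

```python
from collections import defaultdict

# Hypothetical detail records; codes and amounts are invented for illustration.
records = [
    {"wbs": "1.1", "commodity": "CONC", "contract": "C-001", "budget": 100.0, "forecast": 115.0},
    {"wbs": "1.1", "commodity": "CONC", "contract": "C-002", "budget": 80.0, "forecast": 80.0},
    {"wbs": "1.1", "commodity": "STEEL", "contract": "C-003", "budget": 60.0, "forecast": 60.0},
]


def roll_up(records, keys=("wbs", "commodity"), values=("budget", "forecast")):
    """Sum the value columns of every record that shares the same key codes."""
    accounts = defaultdict(lambda: dict.fromkeys(values, 0.0))
    for rec in records:
        account = tuple(rec[k] for k in keys)
        for v in values:
            accounts[account][v] += rec[v]
    return dict(accounts)


# Control accounts at WBS + commodity level: contracts are blended together.
control_accounts = roll_up(records)

# Adding the contract to the key changes the level of detail of the account.
per_contract_accounts = roll_up(records, keys=("wbs", "commodity", "contract"))
```

The choice of `keys` is the level-of-detail decision in miniature: fewer keys give a cleaner comparison against the estimate, more keys keep each contract visible.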

When we look at scope, it is much easier to compare against our estimate, which was built to this level before we had to deal with specified growth, claims, site instructions and even contractor commitments. This is why we want control account management, and why so many cost tools are forcing us down this path.

What's the Problem?

In the above example, the “control account” is meaningless as a management tool. We cannot “manage” anything at the control account level. The control account is only a metric.

Instead, we are going to manage our contracts in isolation. Each contract will have its own specifics, and likely its own approvals when we modify a forecast or a commitment.

A solution to this conundrum is to split the above into 4 control accounts (or more). However, that creates a nightmare for everyone dealing with the new cost systems, where creating cost accounts and loading budgets and costs are not straightforward. Doubly so, as we haven’t even begun to discuss at what level we manage our time-phased data.

All the new tools also allow us to manage “detail items”. But again, as soon as you start to push the level of management down into the detail items, you may as well make each detail item its own control account.

The Problem is – What's the right Level of Detail?

You can run this problem down rabbit holes with how we manage engineering deliverables, progress items, manhours, quantities, etc. Here I have presented the problem strictly at a cost control level. Yet the dimensions of each of the items above are multiplied by each of the additional management datasets we also track.

Picking the right level of detail to put into our cost tools is more of an art form than a science.

My view is that we need easy, flexible transactional capabilities from cost systems to ease the “Excel hell” aspects. At the same time, we need to understand how the transactional records join up to a more formal CTR or control account level of detail.