TL;DR : Trying to simulate Fabric DWH Bursting using Fabric Notebook and DuckDB.

Setup

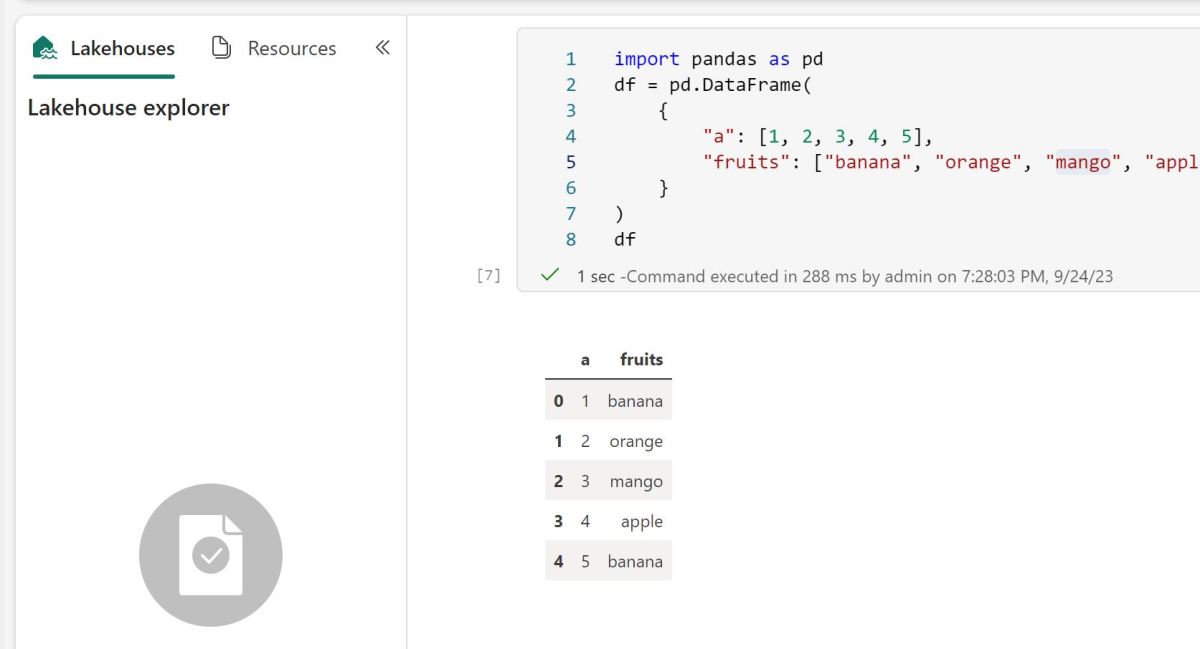

I am using TPCH_SF100 (600M rows) as an example, it does not matter you can use any dataset as long as you keep the same queries.

Fabric compute is rather serverless, you can’t customize it, except for Notebook where we can choose the number of CPU.

The test is very simple: run the same queries using a Fabric Notebook with different compute size, 4 Compute Unit, 8,16,32 and save the results in a OneLake.

To be very clear, Fabric SQL Engine is totally different from DuckDB, it does scale by adding more nodes, DuckDB is a single node and it scales by adding more CPU to the same node, but I think it is the same principle.

Scale is not linear.

- When increasing CU from 4 to 8, the duration decreased by 50 %

- From 8 CU to 16 , the Duration decreased by 24 %

- From 16 to 32, we reach a plateau, the duration stayed the same ( maybe OneLake throughput became the bottleneck)

I think it is fair to say regardless of the DB used at certain point adding more CPU will have diminishing returns.

Let’s now show the same graph but with $ values

For Me I will pick CU = 8 as the optimal solution, for the same cost as CU= 4 and half the duration, that’s what I call a manual bursting 🙂

Bursting in Fabric DWH is an automatic algorithm to *predict* the optimum compute unit for a particular workload.

I don’t know how Fabric DWH increases resource allocations but I suspect it just adds ( and removes) more nodes.

What’s not clear though, is how the system decided what’s the best solution, using the previous example; one can argue that for interactive Queries CU= 16 is the best option, yes you consume way more resources but it is faster. And for batch ETL jobs the only thing that matters is the lowest cost.

Smaller SKU can do very big thing

One of the most interesting implication of Bursting is that smaller SKU like F2 can do very big workload, F2 gives you 2 CU, but using the previous dataset as an example, it can scale just fine to 16 CU, but there is no free lunch, the total compute you are entitled to is still 2 CU X 24 Hours = 48 CU(h)

The challenge

Cost based optimization in Database is a very complex problem, adding dynamic resource allocation makes it even harder ,otherwise everyone will be doing it 🙂

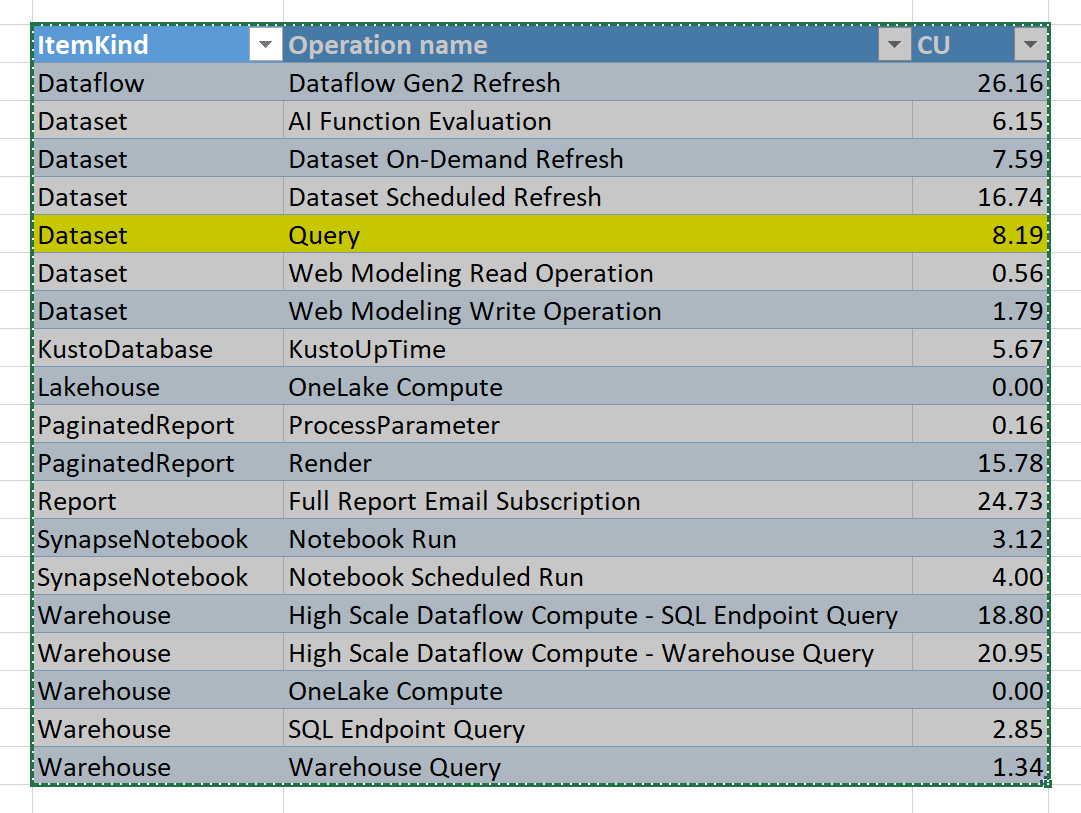

For me personally, SQL Engine so far seems to behave as expected, I did not see anything particularly extreme, some queries using as low as 2 CU for smaller workload. for a TB dataset bursting went till 72 CU.

What matters is continuous improvements.

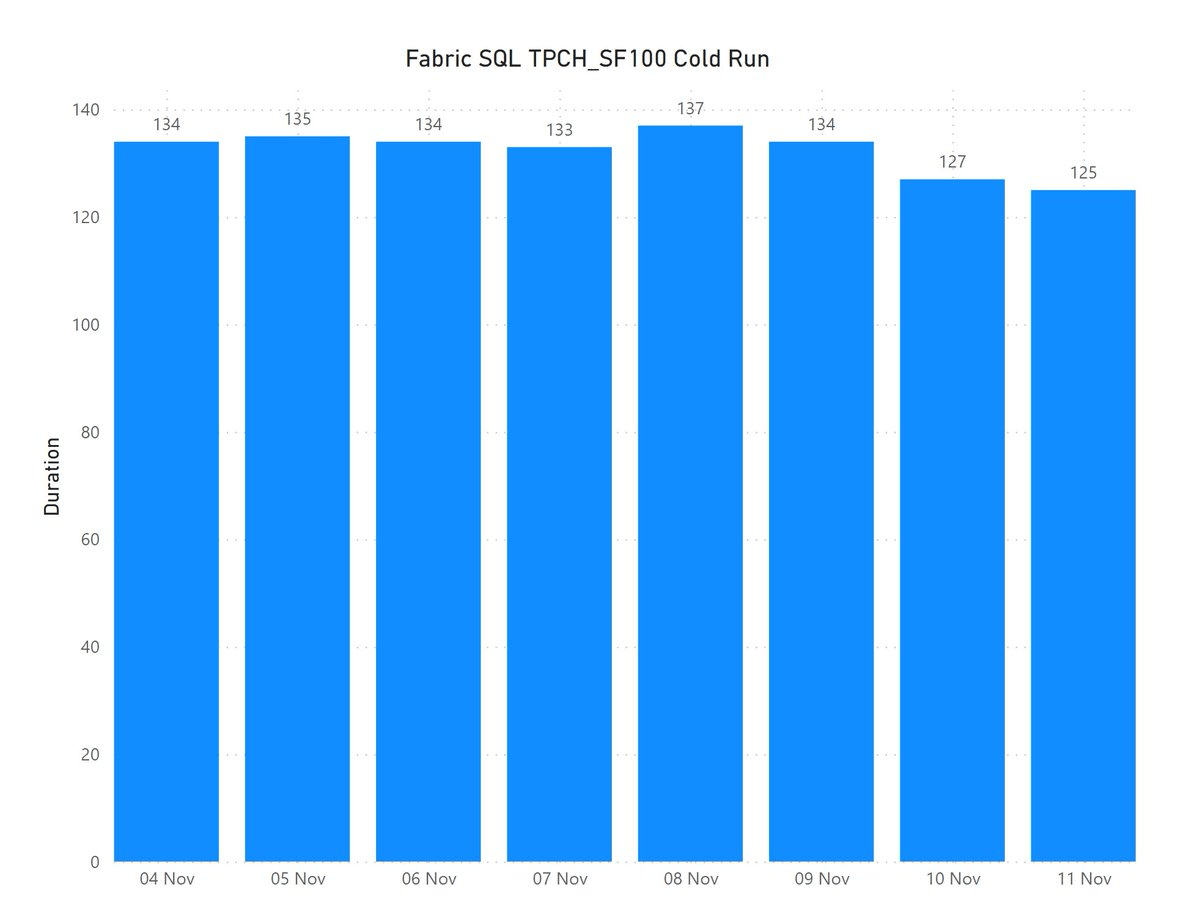

I think what matters the most is that the efficiency keep improving, today Fabric DWH TPCH_SF100 cold run finish around 2 minutes for 6 cents ( that’s pretty much the same performance as Cloud DWH X) which is great, but my hope in less than 5 years, the same workload will finish in less than 60 second for 3 cents.

And that’s the next big thing for Fabric, just efficiency improvement and bug fixes, nothing fancy.