TL;DR: Fabric Lakehouse doesn’t support ACID transactions because the storage bucket is not locked, and that is a very good thing. If you want ACID, use the DWH offering.

Introduction

When you read the decision tree between Fabric DWH and Lakehouse you may get the impression that in terms of ACID support the main difference is the support for Multi Table Transactions for DWH.

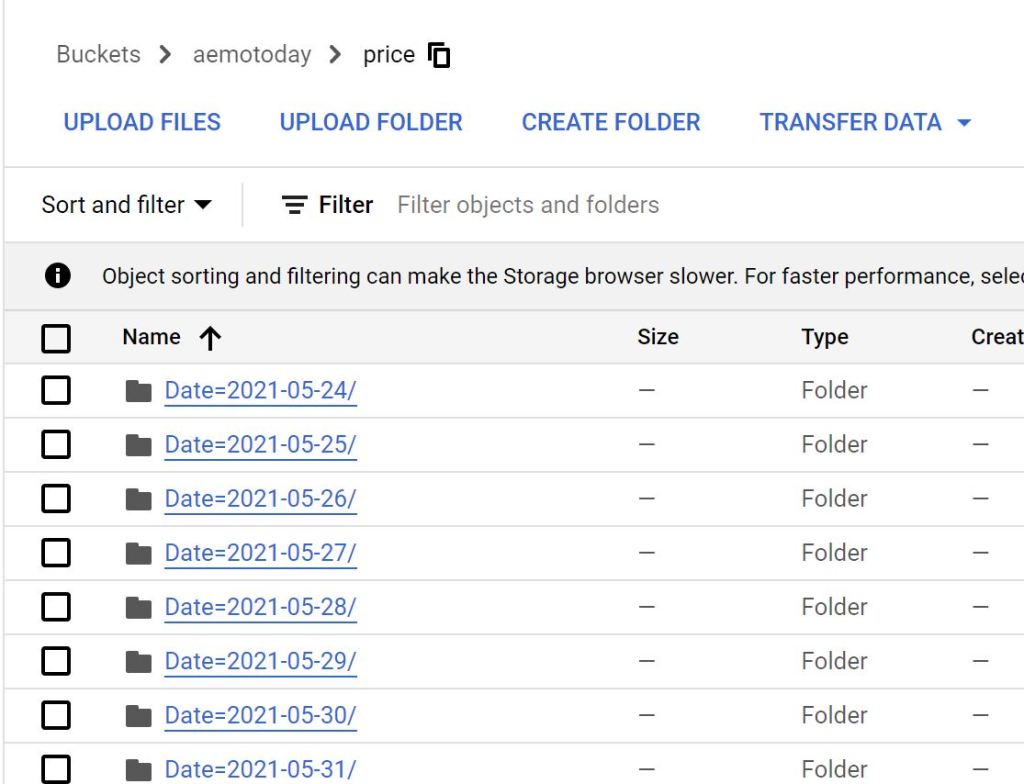

The reality is that the Lakehouse in Fabric is totally open, and there is no consistency guarantee at all, even for a single table. All you need is proper access to the workspace, which gives you access to the storage layer; then you can simply delete a folder and mess with any Delta table. That’s not a bad thing: it gives you maximum freedom to do stuff like writing data using different engines, or just uploading directly to the “managed” table area.
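To make the "no guarantee" point concrete, here is a minimal sketch of how you could check whether someone has deleted data files behind a Delta table's back. It replays the JSON commits in `_delta_log` and compares the result with what is actually on disk. The function names are mine, a local folder stands in for OneLake, and the sketch deliberately ignores checkpoints and URL-encoded or absolute paths, so treat it as an illustration rather than a real Delta reader.

```python
import json
from pathlib import Path


def live_files(table_dir: Path) -> set[str]:
    """Replay the JSON commits in _delta_log and return the data files
    the log currently considers part of the table.

    Simplification (assumption): checkpoint files are ignored and the
    paths in the log are treated as plain relative paths.
    """
    files: set[str] = set()
    # Commit file names are zero-padded, so lexical order is commit order.
    for commit in sorted((table_dir / "_delta_log").glob("*.json")):
        for line in commit.read_text().splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "add" in action:
                files.add(action["add"]["path"])
            elif "remove" in action:
                files.discard(action["remove"]["path"])
    return files


def missing_files(table_dir: Path) -> set[str]:
    """Data files the log still references but that are gone from storage."""
    return {f for f in live_files(table_dir) if not (table_dir / f).exists()}
```

If `missing_files` returns anything, the log and the storage disagree, and readers of that table will most likely fail.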

Managed vs unmanaged Tables

In Fabric, the only managed tables are the ones maintained by the DWH. I tried to delete a file using Microsoft Azure Storage Explorer and, to my delight, it was denied.

I have to say, it still feels weird to look at a DWH Storage, it is like watching something we are not supposed to see 🙂

Ok what’s this OneSecurity thing

It is not available yet, so I have no idea, but surely it will be some sort of catalog. I just hope it will not be Java-based, and I am pretty sure it will be read-only.

How About the Hive Metastore in the Lakehouse

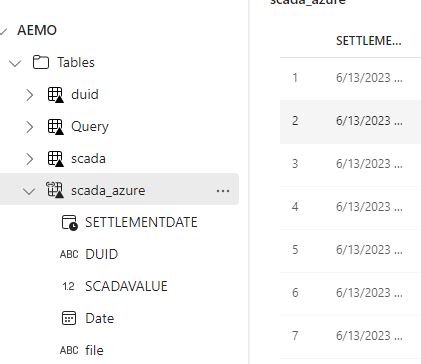

It seems the Hive metastore acts like a background service: when you open the Lakehouse interface, it scans the “/Tables” section of the Azure cloud storage and detects whether there is a valid Delta table. I don’t think there is a public HMS endpoint.
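I don't know how the service really does it, but the discovery step described above could look something like this sketch. It assumes a folder counts as a "valid Delta table" when it contains a `_delta_log` directory with at least one JSON commit. That rule and both function names are my assumptions, and a local directory stands in for the “/Tables” area.

```python
from pathlib import Path


def looks_like_delta_table(folder: Path) -> bool:
    """Assumed rule: a Delta table has a _delta_log directory
    containing at least one JSON commit file."""
    log_dir = folder / "_delta_log"
    return log_dir.is_dir() and any(log_dir.glob("*.json"))


def discover_tables(tables_root: Path) -> list[str]:
    """Return the names of the sub-folders that look like Delta tables."""
    return sorted(
        child.name
        for child in tables_root.iterdir()
        if child.is_dir() and looks_like_delta_table(child)
    )
```

Anything dropped into the folder that does not pass the check (say, a folder of loose Parquet files) would simply not be picked up as a table.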

What’s the Implication

So you have three options:

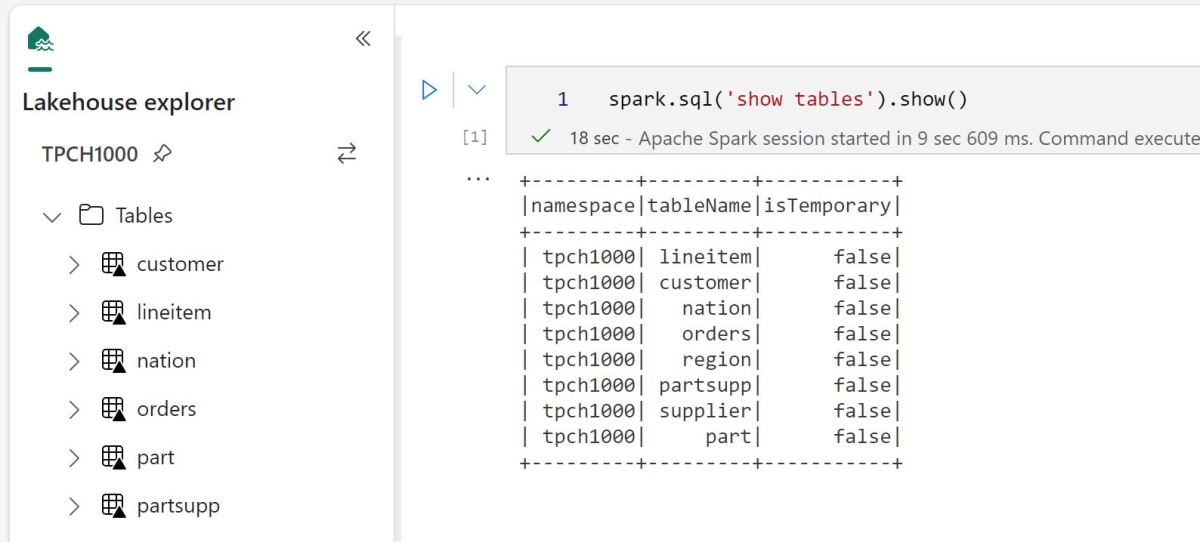

- Ingest data using Fabric DWH: it is ACID and very fast, as it uses dedicated compute.

I have done a test and imported the data for TPCH_SF100, which is around 100 GB uncompressed, in 2 minutes (roughly 0.8 GB/s)! That’s very fast.

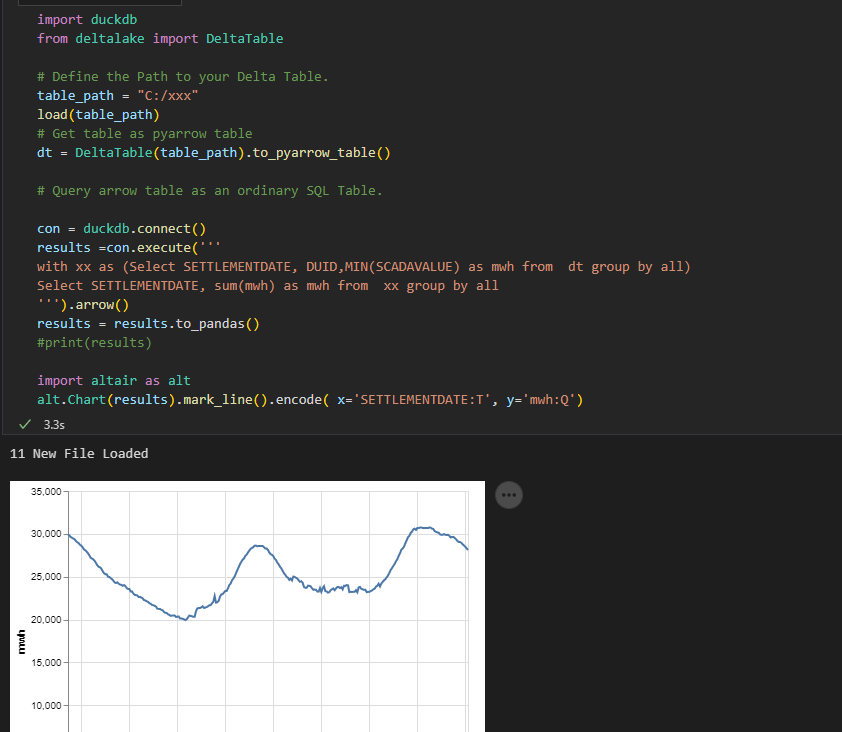

It seems table stats are created at ingestion time too, but you pay for the DWH usage. Don’t forget the data is still in the Delta table format, so it can be read by any compatible engine (when reading directly from the storage).

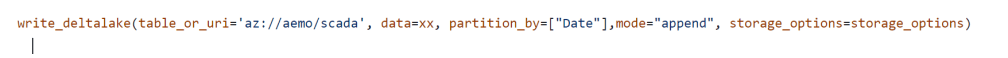

- Use Lakehouse tables: Fabric has great support for reading LH tables (stats at runtime, though), but you have to maintain data consistency yourself. You have to run vacuum, make sure multiple writers are not running concurrently, and make sure no one is messing with your Azure storage bucket. It can be extremely cheap, though, as any compatible Delta table writer is accepted.

- Use shortcuts from Azure Storage: you can literally build all your data pipelines outside of Fabric and just link them. See example here.
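To give an idea of what "you have to run vacuum" means for the Lakehouse option, here is a rough dry-run sketch: it lists Parquet files that the table no longer references and that are older than a retention window. The 168 hours match Delta's default of 7 days. The function name is hypothetical, the set of referenced files is passed in by the caller (working it out from the log is out of scope here), and nothing is deleted.

```python
import time
from pathlib import Path
from typing import Optional


def vacuum_candidates(
    table_dir: Path,
    referenced: set[str],
    retention_hours: float = 168.0,
    now: Optional[float] = None,
) -> list[str]:
    """List data files that are unreferenced and older than the retention
    window. Dry run only: the files are reported, not removed."""
    now = time.time() if now is None else now
    cutoff = now - retention_hours * 3600
    candidates = []
    for path in table_dir.rglob("*.parquet"):
        rel = path.relative_to(table_dir).as_posix()
        if rel.startswith("_delta_log/"):
            continue  # checkpoints live in the log folder, they are not data files
        if rel not in referenced and path.stat().st_mtime < cutoff:
            candidates.append(rel)
    return sorted(candidates)
```

In practice you would let your Delta writer's own vacuum command do this; the point is only that on a Lakehouse table, somebody has to schedule it.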

It is up to you; it is a classic managed vs unmanaged situation. I suspect the cost of Fabric DWH will push the decision one way or the other. Personally, I don’t think maintaining DB tables is a very good idea. But remember, either way, it is still an open table format.

Edit: 16-June-2023

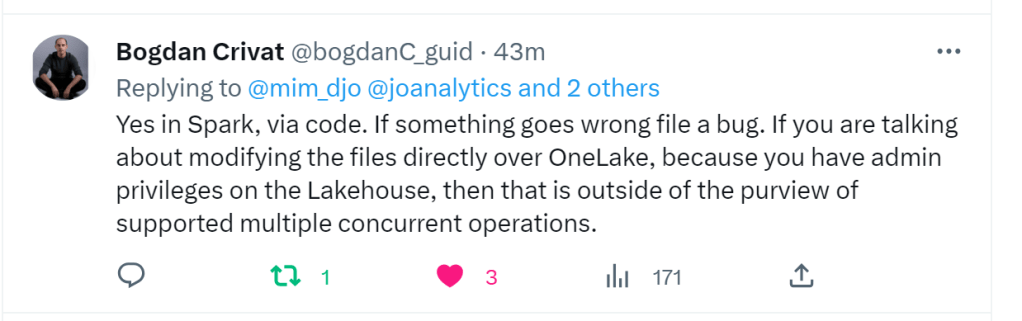

Assuming you don’t modify Delta table files directly from OneLake and use only Spark code, multiple optimistic writers to the same table is a supported scenario. Previously I had an issue with that setup, but it was on S3, and it seems Azure Storage is different. Anyway, this is from the horse’s mouth 🙂
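For what it's worth, here is a minimal sketch of why optimistic writers can work at all. Each writer tries to create the next numbered commit file in `_delta_log`, and only one of them can win that version. This relies on the storage offering an atomic "create only if absent" operation, which is my best guess for why the behaviour differed on S3. The sketch uses a local folder and `O_EXCL` as a stand-in for that guarantee, the function names are mine, and a real writer would also check whether the winning commit logically conflicts with its own before retrying.

```python
import json
import os
from pathlib import Path


def try_commit(log_dir: Path, version: int, actions: list[dict]) -> bool:
    """Attempt to write commit `version`. Returns False if another writer
    already created that commit file (this attempt lost the race)."""
    target = log_dir / f"{version:020d}.json"
    try:
        # O_EXCL makes creation fail if the file already exists: a local
        # stand-in for the put-if-absent guarantee of the object store.
        fd = os.open(target, os.O_WRONLY | os.O_CREAT | os.O_EXCL)
    except FileExistsError:
        return False
    with os.fdopen(fd, "w") as fh:
        fh.write("\n".join(json.dumps(a) for a in actions) + "\n")
    return True


def next_version(log_dir: Path) -> int:
    """Next free commit number, based on the commit files present."""
    versions = [int(p.stem) for p in log_dir.glob("*.json")]
    return max(versions, default=-1) + 1


def commit_with_retry(log_dir: Path, actions: list[dict], max_attempts: int = 5) -> int:
    """Keep trying the next free version until the commit lands.
    Simplification: no logical conflict check between attempts."""
    for _ in range(max_attempts):
        version = next_version(log_dir)
        if try_commit(log_dir, version, actions):
            return version
    raise RuntimeError("could not commit after several attempts")
```

This also shows why editing files directly in OneLake is out of bounds for this scenario: the whole scheme assumes every writer goes through the log.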